A bad prompt feels like giving directions by pointing vaguely toward the horizon. You might arrive, but you will probably waste time, take wrong turns, and argue with yourself about whether you missed the exit.

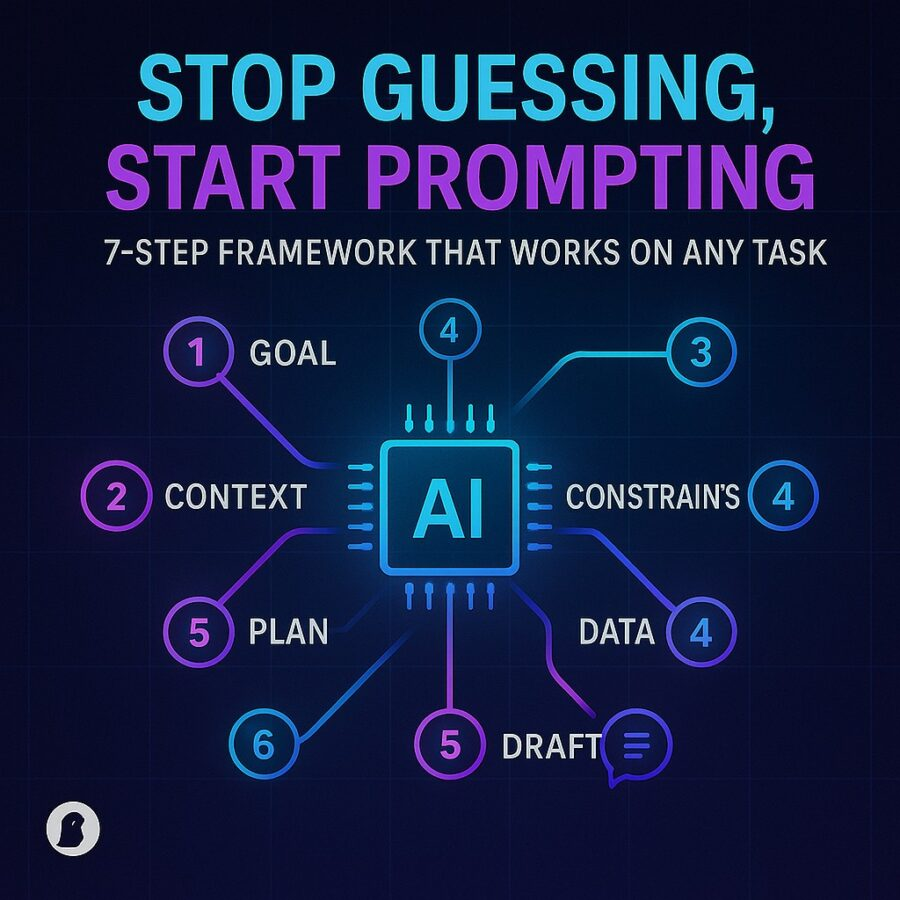

A good prompt is closer to handing someone a clear lab worksheet: goal, materials, constraints, method, and what “done” looks like. That is the mindset behind Stop Guessing, Start Prompting, a 7-step framework that works on any task.

OpenAI’s own guidance keeps repeating the same theme: be clear, be specific, and iterate based on the output. (OpenAI Help Center) Anthropic has been pushing a similar idea, but with stronger emphasis on organizing context so the model stays oriented as tasks get longer. (Anthropic)

Below is a seven-step framework you can use for writing, coding, planning, studying, marketing, or troubleshooting. You can run it in one prompt, or split it into two passes when the job is bigger.

Step 1: Declare the finish line

Start by stating the outcome in one sentence. Not the topic, the deliverable.

Good: “Create a 10-bullet checklist I can follow.”

Better: “Create a 10-bullet checklist I can follow, plus a 3-item ‘common mistakes’ section.”

OpenAI’s prompt guidance is blunt about this: specificity around outcome, length, and format improves results. (OpenAI Help Center)

Prompt: Deliverable: Write a 600-word blog section that explains the concept to beginners, includes one analogy, and ends with a 5-item checklist. Topic: [TOPIC].

Why this works: you reduce “interpretation space.” The model has fewer ways to be “technically responsive” while missing what you wanted.

Step 2: Provide the minimum context that changes the answer

Context is not “everything you know.” Context is what would make a correct response differ from a generic one.

Use three buckets:

- Audience: who will read or use this

- Situation: what is happening in the real world

- Constraints: what must be true, what must not happen

OpenAI explicitly recommends providing relevant context and placing it where the model can use it effectively. (OpenAI Platform) Anthropic’s context engineering framing is similar: careful context organization beats random extra text. (Anthropic)

Prompt: Context: Audience is non-technical small business owners. Situation: they are choosing a tool this week. Constraints: no jargon, short paragraphs, and no claims that require browsing unless you say “needs verification.”

A professor’s analogy: context is like the constants in a physics problem. If you do not state them, everyone solves a different problem.
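If you reuse the three buckets often, it can help to build the context line from named fields so none of them gets skipped. The sketch below is one possible way to do that; the function name `render_context` and its parameters are illustrative assumptions, not part of any vendor's API.

```python
def render_context(audience: str, situation: str, constraints: list[str]) -> str:
    """Render the three context buckets (audience, situation, constraints) as one line."""
    if not audience.strip() or not situation.strip():
        raise ValueError("audience and situation must be non-empty")
    if not constraints:
        raise ValueError("list at least one constraint")
    joined = ", ".join(c.strip() for c in constraints)
    return (
        f"Context: Audience is {audience.strip()}. "
        f"Situation: {situation.strip()}. "
        f"Constraints: {joined}."
    )


# Example values taken from the prompt above.
context = render_context(
    audience="non-technical small business owners",
    situation="they are choosing a tool this week",
    constraints=["no jargon", "short paragraphs"],
)
print(context)
```

Raising an error on an empty bucket is a deliberate choice here: a missing constant should stop you before the prompt is sent, not after the answer comes back generic.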

Step 3: Assign a role, but tie it to behavior

Role prompts help, but only when they change what the model does. “Act as an expert” is vague. “Act as a careful reviewer who flags assumptions” changes behavior.

OpenAI’s best practices emphasize clarity about the style and approach you want. (OpenAI Help Center)

Prompt: Role: You are a senior engineer and a strict reviewer. Behavior rules: (1) explain tradeoffs, (2) flag hidden assumptions, (3) include a quick sanity check section.

This step is about personality only in the sense that it shapes habits: cautious, concise, example-driven, or skeptical.

Step 4: Give the model the “materials” to work from

If you want accurate output, provide source text, data, or a small set of facts. Otherwise you are asking the model to improvise, which is fine for brainstorming and risky for specifics.

When you cannot provide materials, instruct the model to separate what it knows from what it is guessing. OpenAI’s prompting advice supports iterating and refining, especially when the first attempt misses because inputs were incomplete. (OpenAI Help Center)

Prompt: Materials: Here are my notes (verbatim). Use only these notes for facts. If something is missing, label it “Unknown” and ask one question at the end. Notes: [PASTE].

Think of “materials” as ingredients. If you want bread, you can improvise spices, but you cannot improvise flour.
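One way to make the "use only these notes" rule harder to ignore is to wrap the notes in explicit delimiters, so the model can tell instructions from source text. The sketch below assumes a hypothetical helper named `render_materials`; the `=== NOTES START ===` markers are an arbitrary choice, and any unambiguous delimiter should work.

```python
def render_materials(notes: str) -> str:
    """Wrap verbatim notes in delimiters, or fall back to a known-vs-guess instruction."""
    body = notes.strip()
    if not body:
        # No materials supplied: ask the model to separate knowledge from guesses.
        return (
            "Materials: none provided. "
            'Label each factual claim as "Known" or "Guess".'
        )
    return (
        "Materials: Here are my notes (verbatim). Use only these notes for facts. "
        'If something is missing, label it "Unknown" and ask one question at the end.\n'
        "=== NOTES START ===\n"
        f"{body}\n"
        "=== NOTES END ==="
    )


print(render_materials("Launch date: March 3.\nBudget: not decided."))
```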

Step 5: Specify a method when the task needs reasoning or tools

For easy tasks, you can skip method. For messy tasks, method is the guardrail that prevents wandering.

Two research-backed prompting methods are worth knowing:

Chain-of-thought prompting: asking for intermediate reasoning steps can improve performance on complex reasoning problems, as shown by Wei et al. (arXiv)

ReAct prompting: interleaving reasoning with actions (like tool calls or retrieval) helps models plan, adapt, and handle exceptions, as shown by Yao et al. (arXiv)

You do not need to demand long internal monologues. You do need to demand a structured approach.

Prompt: Method: (1) List unknowns, (2) propose 2 solutions, (3) compare them with pros and cons, (4) choose one and justify, (5) produce the final output. Keep the planning section under 120 words.

If the task involves web research, set the research bar up front. The OpenAI GPT-5.2 prompting guide recommends explicitly stating how you want search and synthesis handled, including resolving contradictions and stopping when marginal value drops. (OpenAI Cookbook)

Prompt: Research bar: Use 3 to 6 credible sources, resolve conflicts, and cite sources for key claims. If you cannot verify, say so plainly.

Step 6: Lock the output format so it cannot “wiggle”

Most prompt frustration is formatting drift. You asked for a checklist and got an essay. You asked for code comments and got a lecture. Format locking fixes that.

OpenAI’s guidance repeatedly calls out specifying format and length. (OpenAI Help Center)

Use explicit templates:

Prompt: Output format exactly: Title (one line). Then 5 short paragraphs. Then a checklist with 10 items. Then “Questions” with up to 3 questions. No extra sections.

If you are building agent workflows or piping outputs into tools, structured formats matter even more. A strict shape makes validation possible, and validation makes systems safer.

A simple classroom analogy: if you are grading, you want students to answer in the same layout so you can check work quickly.
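To make "validation" concrete, here is a minimal sketch of a shape check. It assumes you asked the model to return the template above as JSON with four keys (`title`, `paragraphs`, `checklist`, `questions`); those key names and the function `validate_response` are illustrative assumptions, not a standard.

```python
import json


def validate_response(raw: str) -> list[str]:
    """Return a list of format problems; an empty list means the shape is valid."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top level must be a JSON object"]

    problems = []
    # "No extra sections" from the template becomes "no unexpected keys".
    extra = set(data) - {"title", "paragraphs", "checklist", "questions"}
    if extra:
        problems.append(f"extra sections: {sorted(extra)}")

    title = data.get("title")
    if not isinstance(title, str) or not title.strip() or "\n" in title:
        problems.append("title must be one non-empty line")

    rules = (
        ("paragraphs", lambda n: n == 5, "exactly 5"),
        ("checklist", lambda n: n == 10, "exactly 10"),
        ("questions", lambda n: n <= 3, "at most 3"),
    )
    for key, count_ok, rule in rules:
        items = data.get(key)
        if not isinstance(items, list) or not all(isinstance(i, str) for i in items):
            problems.append(f"{key} must be a list of strings")
        elif not count_ok(len(items)):
            problems.append(f"{key} must have {rule} items, got {len(items)}")
    return problems


good = json.dumps(
    {"title": "T", "paragraphs": ["p"] * 5, "checklist": ["c"] * 10, "questions": []}
)
print(validate_response(good))  # prints []
```

Returning a list of problems instead of raising on the first one means you can paste the whole list back into a follow-up prompt and ask for a corrected response.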

Step 7: Add a feedback loop so prompts improve instead of repeating mistakes

Prompting is not a one-shot talent. It is closer to coaching. You run a drill, review the tape, then adjust.

OpenAI explicitly recommends iterative refinement: start with a draft prompt, review the response, and refine. (OpenAI Help Center)

Here is a tight iteration pattern you can reuse:

- Ask for the output

- Ask for self-critique using your rubric

- Ask for a revised output that fixes the critique

Prompt: Now critique your answer against these criteria: correctness, clarity, completeness, and usefulness. List 5 improvements. Then rewrite the answer applying those improvements. Do not add new claims that require new sources.

This step is how you stop “guessing.” You stop hoping the first attempt lands. You treat the first attempt as a draft.
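If you run this pattern through an API instead of a chat window, the three asks become a small loop. The sketch below assumes a hypothetical `call_model` function that takes a prompt string and returns the model's text; swap in whatever client you actually use. The demo uses a fake model so the control flow can be checked without network access.

```python
from typing import Callable

CRITIQUE_PROMPT = (
    "Critique the answer below against these criteria: {rubric}. "
    "List 5 improvements.\n\nAnswer:\n{answer}"
)
REVISE_PROMPT = (
    "Rewrite the answer applying the improvements. "
    "Do not add new claims that require new sources.\n\n"
    "Answer:\n{answer}\n\nImprovements:\n{critique}"
)


def draft_critique_revise(
    task_prompt: str,
    call_model: Callable[[str], str],  # hypothetical: prompt in, text out
    rubric: list[str],
    rounds: int = 1,
) -> str:
    """Ask for a draft, then run critique-and-revise passes against the rubric."""
    answer = call_model(task_prompt)
    for _ in range(rounds):
        critique = call_model(
            CRITIQUE_PROMPT.format(rubric=", ".join(rubric), answer=answer)
        )
        answer = call_model(REVISE_PROMPT.format(answer=answer, critique=critique))
    return answer


# Stand-in for a real model, used only to show the call sequence.
calls = []

def fake_model(prompt: str) -> str:
    calls.append(prompt)
    if prompt.startswith("Critique"):
        return "1. Add an example."
    if prompt.startswith("Rewrite"):
        return "revised draft"
    return "first draft"


result = draft_critique_revise(
    "Write a 10-bullet checklist.", fake_model, ["correctness", "clarity"]
)
print(result)  # prints "revised draft"
```

Keeping `rounds` at 1 by default mirrors the "revise once" habit; more rounds cost more calls and may not keep improving the answer.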

The full 7-step prompt template you can copy

Below is a single prompt you can adapt to almost any task. Replace the bracketed parts.

Prompt: Deliverable: [WHAT YOU WANT PRODUCED]. Success looks like: [MEASURABLE QUALITIES]. Context: Audience: [WHO]. Situation: [WHY NOW]. Constraints: [LIMITS, MUSTS, MUST-NOTS]. Role: [ROLE] with behavior rules: [2 to 4 RULES]. Materials: [PASTE NOTES OR SAY “NONE”]. Method: [STEPS TO FOLLOW, KEEP BRIEF]. Output format: [EXACT TEMPLATE]. Quality check: Critique against [RUBRIC] then revise once.
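If you fill this template in often, a small data class can keep the fields honest. The following is a sketch, not a canonical implementation: the class name `PromptSpec` and its field names are assumptions chosen to mirror the bracketed slots above.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """One field per bracketed slot in the 7-step template."""
    deliverable: str
    success: str
    audience: str
    situation: str
    constraints: str
    role: str
    behavior_rules: list[str]
    method: str
    output_format: str
    rubric: str
    materials: str = "NONE"

    def render(self) -> str:
        # The template asks for 2 to 4 behavior rules.
        if not 2 <= len(self.behavior_rules) <= 4:
            raise ValueError("use 2 to 4 behavior rules")
        rules = " ".join(f"({i}) {r}" for i, r in enumerate(self.behavior_rules, 1))
        return "\n".join([
            f"Deliverable: {self.deliverable}",
            f"Success looks like: {self.success}",
            f"Context: Audience: {self.audience}. Situation: {self.situation}. "
            f"Constraints: {self.constraints}",
            f"Role: {self.role} with behavior rules: {rules}",
            f"Materials: {self.materials}",
            f"Method: {self.method}",
            f"Output format: {self.output_format}",
            f"Quality check: Critique against {self.rubric} then revise once.",
        ])


spec = PromptSpec(
    deliverable="a 10-bullet checklist",
    success="each bullet is one action I can finish in a day",
    audience="non-technical small business owners",
    situation="they are choosing a tool this week",
    constraints="no jargon, short paragraphs",
    role="a strict reviewer",
    behavior_rules=["explain tradeoffs", "flag hidden assumptions"],
    method="list unknowns, then draft, then trim",
    output_format="title, then a numbered checklist, no extra sections",
    rubric="correctness, clarity, completeness",
)
prompt = spec.render()
print(prompt)
```

Putting each section on its own line is a formatting choice made here for readability; the single-line version above carries the same content.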

Three quick examples on totally different tasks

Example A, writing

Use Steps 1, 2, and 6 heavily. Method can be light.

Example B, debugging

Use Steps 4 and 5 heavily. Materials include logs, error messages, and the failing file.

Example C, decision making

Use Steps 1, 2, 5, and 7. Ask for options, tradeoffs, then a scored choice.

The framework stays the same. Only the emphasis changes.

Wrap-up

The reason this works is not mysterious. It is structured communication. You define the finish line, supply the right context, constrain the method, and demand a consistent output. That matches what OpenAI and Anthropic both keep teaching in their own documentation: clarity, context, and iteration are the reliable levers. (OpenAI Help Center)

When you apply the Stop Guessing, Start Prompting framework, you stop treating prompts like lottery tickets. You start treating them like repeatable instructions.